My favourite word from Bett 2024: Grounding

Every year, at the same time as Bett, the major Edtech exhibition, Microsoft UK invite their product teams over from the US. The US team run a series of compelling seminars on the latest Edtech solutions, and take the time to meet with customers and partners before and during the show.

One of the Microsoft pre-Bett sessions this year was a “Copilot deep dive.” As Copilot seems to be trending on all the channels, Matt Dunkin, our COO, went to take a look.

What is Microsoft Copilot?

Microsoft Copilot, previously known as Bing Chat (and earlier as Bing AI), is an AI chatbot similar to ChatGPT that is useful when searching for information. According to Microsoft, Copilot “combines the power of large language models (LLMs) with your data in the Microsoft Graph and the Microsoft 365 apps to turn your words into the most powerful productivity tool on the planet.”

How does Copilot work?

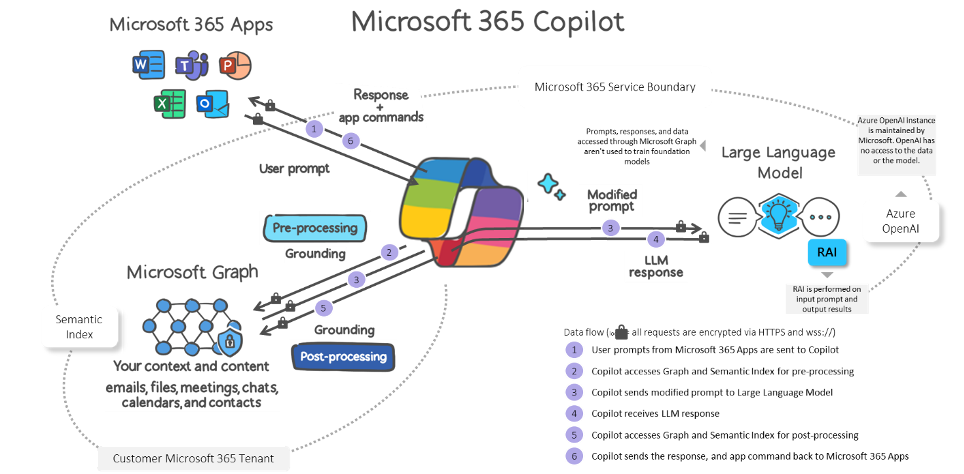

Copilot is integrated into Microsoft 365 in two ways. First, it works alongside the user because it is embedded in all the well-known Microsoft 365 apps (Word, Excel, PowerPoint, Outlook, Teams and more) to, in Microsoft’s words, “unleash creativity, unlock productivity and uplevel skills.” Second, Copilot includes a Business Chat, which works across the LLM, the Microsoft 365 apps, and user data such as calendars, emails, chats, documents, meetings and contacts. Users can give it natural language prompts, like “Tell my team how we updated the product strategy,” and it will generate a status update based on the morning’s meetings, emails and chat threads.

The session I attended presented lots of detail about Microsoft Copilot, as well as Copilot in Education. It also featured several use cases and discussed Copilot extensibility. But the highlight for me was the introduction of “grounding.”

What is grounding?

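Grounding means tying the model’s answers to a specific source of data, so that it responds from that data rather than from its general training alone. Standard Copilot is effectively “grounded” to Bing, so its answers draw on web search results.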

Copilot for Microsoft 365 is grounded to your Microsoft 365 tenant, and it only uses data that the user asking the question already has access to. Immediately this makes you very aware of the need to manage your data properly. Security, data lifecycle and information protection suddenly become very relevant. Simply putting Copilot for Microsoft 365 in a poorly managed tenant will produce bad results, because out-of-date or overshared content is treated as a source just like anything else. The phrase “garbage in, garbage out” instantly springs to mind.
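To make the idea concrete, here is a toy sketch of grounding in Python. It is not how Microsoft implements Copilot; the `Document` class, the `allowed_users` field, the `ground` and `build_prompt` functions and the sample documents are all hypothetical, and simple word overlap stands in for real search. It only illustrates two points: the user's permissions decide what can be used, and whatever is found is passed to the model as-is.

```python
from dataclasses import dataclass


@dataclass
class Document:
    title: str
    text: str
    allowed_users: frozenset  # hypothetical access list, for illustration only


def ground(question, user, documents, top_k=2):
    """Pick the documents this user may read that best match the question."""
    words = set(question.lower().split())
    # Permission trimming: only content the user can already see is considered.
    visible = [d for d in documents if user in d.allowed_users]
    # Naive relevance score: number of words shared with the question.
    scored = [(len(words & set(d.text.lower().split())), d) for d in visible]
    matches = [pair for pair in scored if pair[0] > 0]
    matches.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in matches[:top_k]]


def build_prompt(question, sources):
    """Assemble the text that would be sent to the language model."""
    context = "\n".join(f"- {d.title}: {d.text}" for d in sources)
    return f"Answer using only these sources:\n{context}\n\nQuestion: {question}"


# Made-up tenant content: one stale document, one current, one restricted.
docs = [
    Document("Strategy 2022", "the product strategy focuses on hardware",
             frozenset({"matt"})),
    Document("Strategy 2024", "the product strategy focuses on cloud services",
             frozenset({"matt", "sam"})),
    Document("HR notes", "salary review for the product team",
             frozenset({"hr"})),
]

question = "what is the product strategy"
print(build_prompt(question, ground(question, "matt", docs)))
```

In this sketch the stale “Strategy 2022” document is handed to the model alongside the current one, because nothing in the grounding step knows it is out of date. That is “garbage in, garbage out” in miniature.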

To further make the point about grounding, the extensibility section of the session reviewed how you can use Copilot Studio to create your own tailored Copilot. It was suggested that “Copilot for AspiraCloud” could be a thing and could be grounded to the AspiraCloud website. It’s a much smaller dataset, but I wouldn’t guarantee that every page is 100% accurate. Copilot for AspiraCloud wouldn’t know any different, as it relies entirely on that grounding.

Moving forward, I will fall back on this new word when speaking to colleagues, customers, and prospects, specifically about the importance of data quality. Not everyone will be looking at Copilot right now, but that doesn’t mean you shouldn’t start taking a closer look at your Microsoft 365 tenant. If you aren’t managing your data now, the problem is only getting bigger.

Finally, there are a few different versions of the diagram shown above, but this one clearly shows the apps, Copilot, the grounding (data) and the LLM (an OpenAI GPT model of the kind behind ChatGPT) working together to deliver the experience in Microsoft 365.